import numpy as np

from decimal import *

import matplotlib.pyplot as plt

import seaborn as sns

import scipy

from scipy.integrate import solve_ivp

import sys

sys.path.append('../../')

import RandomMatrix as RM

%matplotlib inline

sns.set()

from math import pi

PI = Decimal(pi)

In the previous section, we discussed the distribution of eigenvalue gaps for Gaussian Ensembles. In particular, we showed Wigner's surmise was a reasonable approximation to the distirbution's density. In this section, we will demonstrate how to compute the true underlying distribution for GUEs and their connection to the zeros of the Riemann Zeta function.

The density of the distribution of gaps between eigenvalues can be computed as the solution to the Painleve V ODE. We will follow the procedure prescribed by Alan Edelman and Per-Olof Persson in Numerical Methods for Eigenvalue Distribution of Random Matrices.

We say $\sigma$ satisfies the Painleve V differential equation if for $t > 0$

$$(t\sigma'')^2 - 4(\sigma - t\sigma')(t\sigma'-\sigma + (\sigma')^2) = 0 $$with the boundary condition

$$\sigma(t) \approx -\frac{t}{\pi} - \left( \frac{t}{\pi} \right)^2 \ \text{ as } t \rightarrow 0^+$$We can rewrite the ODE as

$$ \sigma'' = -\frac{2}{t}\sqrt{(\sigma - t\sigma')(t\sigma'-\sigma + (\sigma')^2)}$$We take the negative root because we want to satisfy the intial condition $\sigma''(t) \approx -\frac{2}{\pi^2} \ \text{ as } t \rightarrow 0^+$

Define $E(t)$ as

$$E(t) := \exp \left({\int_{0}^{\pi t} \frac{\sigma(s)}{s} ds} \right) $$then using the Fundamental Theorem of Calculus and the Chain Rule

$$E'(t) = E(t)\frac{\sigma(\pi t)}{\pi t} \pi = E(t)\frac{\sigma(\pi t)}{t}$$$$E''(t) = E'(t) \frac{\sigma(\pi t)}{t} + E(t) \frac{\sigma'(\pi t) \pi t - \sigma(\pi t)}{t^2} = \frac{E(t)}{t^2} \left(\sigma^2(\pi t) + \sigma'(\pi t) \pi t - \sigma(\pi t) \right) $$Let $p(t)$ be the density of the level spacings for GUE matrices. Then $p(t) = E''(t)$. If we can use the ODE solver to calculate values for $E(t)$, then we can easily compute values for $p(t)$ using the formula above. To assist with using the ODE solver to find $E(t)$ define $I(t)$ as

$$I(t) := \int_{0}^t \frac{\sigma(s)}{s} ds $$Note that

$$I'(t) := \frac{\sigma(t)}{t} $$We can rewrite $E$ as $E(t) = \exp \left( I(\pi t) \right)$. If $x=\frac{t}{\pi}$, then $E(x) = \exp \left( I(t) \right)$. We can use the ODE solver to compute values of $I$ with the system of ODEs

$$ \frac{d}{dt} \begin{pmatrix} \sigma \\ \sigma' \\ I \end{pmatrix} = \begin{pmatrix} \sigma' \\ -\frac{2}{t} \sqrt{(\sigma - t\sigma')(t\sigma' - \sigma + (\sigma')^2)} \\ \frac{\sigma}{t} \end{pmatrix} $$For the boundary condition, if we let $t_0$ be a positive number very close to $0$ then

$$\sigma(t_0) \approx -\frac{t_0}{\pi} - \left( \frac{t_0}{\pi} \right)^2 $$$$\sigma'(t_0) \approx -\frac{1}{\pi} - \frac{2t_0}{\pi^2} \approx - \frac{1}{\pi}$$$$I(t_0) = \int_{0}^{t_0} \frac{\sigma(s)}{s} ds \approx \int_{0}^{t_0} \frac{-\frac{s}{\pi} - \left( \frac{s}{\pi} \right)^2}{s} ds = \int_{0}^{t_0} -\frac{1}{\pi} - \frac{s}{\pi^2} ds = - \frac{t_0}{\pi} - \frac{t^2_0}{2 \pi^2} $$def f(t, y):

σ = y[0]

dσ = y[1]

dy0 = dσ

dy1 = -2/t * np.sqrt(max((σ-t*dσ)*(t*dσ - σ + (dσ)**2), 0))

dy2 = σ/t

return np.array([dy0, dy1, dy2])

t0 = 1e-15

tf = 9

Define the boundary conditions

y0_0 = -t0/np.pi-(t0/np.pi)**2

y0_1 = -1/np.pi-2*t0/np.pi

y0_2 = -t0/np.pi-t0**2/(2*pi**2)

y0 = np.array([y0_0, y0_1, y0_2])

sol = solve_ivp(f, (t0, tf) ,y0, max_step=.001)

σ = sol.y[0][100:]

dσ = sol.y[1][100:]

I = sol.y[2][100:]

t = sol.t[100:]

x = t/np.pi

E = np.exp(I)

p = E/x**2 * (σ**2 + x*np.pi*dσ - σ)

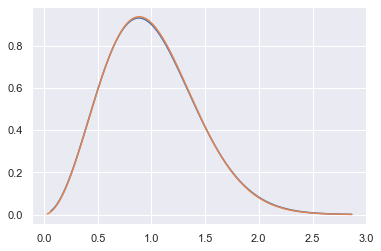

plt.plot(x[100:], p[100:]);

We can plot the density with Wigner's Surmise and see that Wigner's approximation is amazingly accurate, as expected from the experiments from last section.

gamma = lambda x: scipy.special.gamma(x)

def wigner_surmise(s, beta):

a = 2*(gamma((beta+2)/2))**(beta+1)/(gamma((beta+1)/2))**(beta + 2)

b = (gamma((beta+2)/2))**2/(gamma((beta+1)/2))**2

return a * (s**beta) * np.exp(-b*s**2)

plt.plot(x[100:], p[100:])

wigner_curve = wigner_surmise(x, 2)

plt.plot(x, wigner_curve);

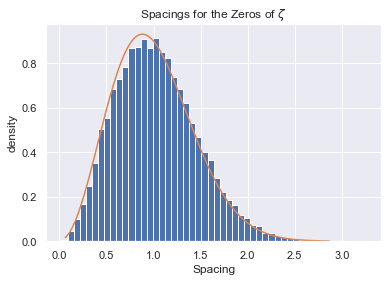

We will now emperically verify our density curve. However, we will unfold the spectra using the procedure suggested by Andrew Odlyzko in The 10^20-th zero of the Riemann zeta function and 175 million of its neighbors. Suppose you have $n$ eigenvalues $\{ \lambda_i \}_{i=1}^n$, then the level spacings $\{ \mu_i \}_{i=1}^{n-1}$ can be normalized to have mean 1 by scaling the differences like so

$$\mu_i = (\lambda_{i+1} - \lambda_i)\frac{\sqrt{4n - \lambda_i^2}}{2 \pi}$$def normalize(λ1, λ2):

# λ1 > λ2

return (λ1 - λ2)*(np.sqrt(4*N-λ2**2)/(2*np.pi))

Below we plot the level spacings for $200 \times 200$ GUE and compare the emperical distribution with the density curve found earlier.

trials = 5000

Δs = []

N = 200

for _ in range(trials):

A = RM.Generate_GUE(N)

λs, V = np.linalg.eigh(A)

λs.sort()

λs_middle = λs[round(N/4):round(3*N/4)]

eig_spaces = [normalize(λs_middle[i+1], λs_middle[i]) for i in range(len(λs_middle)-1)]

Δs.extend(eig_spaces)

plt.ylabel("Density")

plt.xlabel("$\lambda - \lambda'$")

plt.hist(Δs, bins=50, density=True)

plt.plot(x[100:], p[100:]);

The Riemann zeta function is defined as the analytic continuation of the series

$$\zeta(s) = \sum_{n=1}^{\infty} \frac{1}{n^s} $$where $s$ is a complex variable. The function satifies the following functional equation

$$\zeta(s) = 2^s \pi^{s-1} \sin(\frac{s \pi}{2}) \Gamma(1-s) \zeta(1-s) $$Note that if $s$ is a negative, even integer then $\sin(\frac{s \pi}{2}) = 0 \Rightarrow \zeta(s) = 0$. The same cannot be said about positive even integers because of the poles of the Gamma function. Negative, even integers are known as the trivial zeros of the Riemann zeta function. The Riemann hypothesis states that the non-trivial zeros of the zeta function have real part $\frac{1}{2}$. This hypothesis remain unproven today and is one of the most famous open problems in mathematics. Andrew Odlyzko computed hundreds of examples of non-trivial zeros on his website, which we will use in this section. It turns out that there is a connection between Random Matrix theory and the non-trivial zeros of the Riemann zeta function. The differences of normalized imaginary non-trivial zeros of the Riemann zeta function follows the same distribution as the normalized level spacings of eigenvalues of GUE matrices. In particular, if $s_0$ is a non-trivial zero and $\Im(s_0) = \gamma$, we can normalize these gammas to have mean 1 with the following scaling

$$\tilde{\gamma} = \gamma \frac{1}{2 \pi} \log(\frac{\gamma}{2 \pi})$$Below we import Odlyzko's data, normalize it, compute the differences, and plot it against the density curve found earlier in the section.

def get_diffs(γs):

γ_bar = [γ * (γ/(2*PI)).ln()/(2*PI) for γ in γs]

γ_diffs = np.diff(γ_bar)

γ_diffs_fl = [float(γ) for γ in γ_diffs]

return γ_diffs_fl

data1 = np.genfromtxt('zeros_group1.csv', delimiter=',', dtype=str)

γ1 = [Decimal(num) for num in data1]

γ1_bar = get_diffs(γ1)

data2 = np.genfromtxt('zeros_group2.csv', delimiter=',', dtype=str)

γ2 = [Decimal(num) for num in data2]

γ2_bar = get_diffs(γ2)

data3 = np.genfromtxt('zeros_group3.csv', delimiter=',', dtype=str)

γ3 = [Decimal(num) for num in data3]

γ3_bar = get_diffs(γ3)

Γ = γ1_bar.copy()

Γ.extend(γ2_bar)

Γ.extend(γ3_bar)

plt.title("Spacings for the Zeros of $\zeta$")

plt.ylabel("density")

plt.xlabel("Spacing")

plt.hist(Γ, bins=50, density=True)

plt.plot(x[100:], p[100:]);