from sympy import *

import scipy

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import sys

sys.path.append('../../')

import RandomMatrix as RM

%matplotlib inline

sns.set()

np.random.seed(140)

Suppose we have a 2 by 2 GOE with $X$ and $Y$ as the diagonal terms and $Z$ as the off diagonal term. Recall that $X,Y \sim N(0,1)$ and $Z \sim N(0, \frac{1}{2})$

X, Y, Z = symbols("X Y Z")

A = Matrix(np.array([[X,Z], [Z,Y]]))

A

We can easily find the characteristic equation for this general matrix

λ = symbols("λ")

characteristic_eq = det(A - λ*np.eye(2))

characteristic_eq

If we solve to find the eigenvalues and subtract the bigger eigenvalue from the smaller one we get the following expression for the difference between the eigenvalues, denoted by $\Delta$

λ_2, λ_1 = solve(characteristic_eq,λ)

Δ = λ_1 - λ_2

Δ

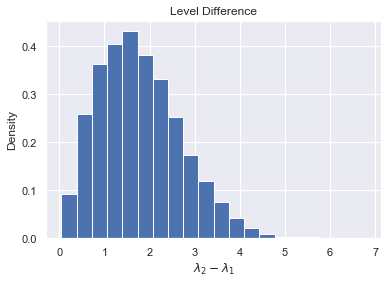

So $\Delta = \sqrt{(X-Y)^2 + 4Z^2}$. If we define $T=X-Y$, then $T \sim N(0, 2)$. We can also define $S=2Z$, and note $S \sim N(0,2)$. We can write $\Delta = \sqrt{T^2 + S^2}$. Thus $\Delta$ is the square root of the sum of squares for iid normals, which brings us to our main result

$$\Delta \sim \text{Rayleigh}(\sqrt{2})$$trials = 5000

Δs = []

for _ in range(trials):

A = RM.Generate_GOE(2)

λs, V = np.linalg.eigh(A)

λ_1, λ_2 = λs[1], λs[0]

Δs.append(λ_1 - λ_2)

plt.ylabel("Density")

plt.xlabel("$\lambda_2 - \lambda_1$")

plt.title("Level Difference")

plt.hist(Δs, bins=20, density=True);

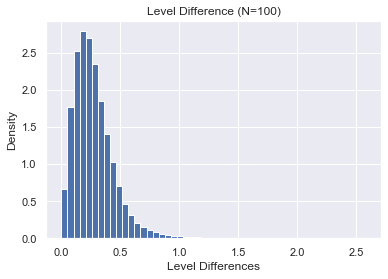

Suppose we want to study what heppens to the spaces between eigenvalues as $N \rightarrow \infty$. For now, let's examine what heppens for $N=100$.

trials = 1000

Δs = []

matrix_size = 100

for _ in range(trials):

A = RM.Generate_GOE(matrix_size)

λs, V = np.linalg.eigh(A)

eig_spaces = [λs[i+1] - λs[i] for i in range(matrix_size-1)]

Δs.extend(eig_spaces)

plt.ylabel("Density")

plt.xlabel("Level Differences")

plt.title("Level Difference (N=100)")

plt.hist(Δs, bins=50, density=True);

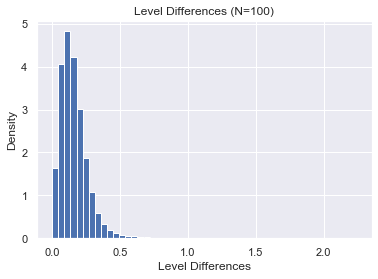

Next we'll try $N=300$.

trials = 1000

Δs = []

matrix_size = 300

for _ in range(trials):

A = RM.Generate_GOE(matrix_size)

λs, V = np.linalg.eigh(A)

eig_spaces = [λs[i+1] - λs[i] for i in range(matrix_size-1)]

Δs.extend(eig_spaces)

plt.ylabel("Density")

plt.xlabel("Level Differences")

plt.title("Level Differences (N=100)")

plt.hist(Δs, bins=50, density=True);

Clearly, and perhaps unsurprisingly, the spacings become smaller and tend towards 0. In order to study the behavior of spacings asymptotically, we need to "unfold" the eigenvalues so that the spacings have mean 1.

Suppose we have a collection of eigenvalues $\lambda_1 \leq \lambda_2 \leq ... \leq \lambda_n$ and we are interested in studying their level distances $\lambda_{i+1} - \lambda_i$. As seen above, these level distances will tend to 0 as the dimension of the Gaussian Ensemble gets large. Thus to get interesting results, we must scale (or "unfold") the eigenvalues so the mean spacing is equal to 1. To find this scaling factor, we use the results of the Wigner's Semicircle law section to define the mean cumulative spectral density

$$F_{N, \beta}(\lambda) = N \int_{-\sqrt{2 \beta N}}^{\lambda} \rho_{\sqrt{2 \beta N}}(t) dt $$Recall from the previous section the closed form solution to the indefinite integral

t = symbols("t")

N, β = symbols("N β", positive=True)

σ = 2/(pi*(2*β*N)) * sqrt((2*β*N) - t**2)

Λ_N_β = integrate(N*σ, t)

Λ_N_β

We can find a closed form expression for $F(\lambda)$

λ = symbols("λ")

F_λ = Λ_N_β.subs(t, λ) - Λ_N_β.subs(t, -sqrt(2*β*N))

F_λ

Thus we get

$ F_{N, \beta}(\lambda) = \begin{cases} 0 & \lambda \leq -\sqrt{2 \beta N} \\ \frac{N}{2} + \frac{1}{\pi \beta} \left(N \beta sin^{-1}(\frac{\sqrt{2}\lambda}{2 \sqrt{N \beta}}) + \frac{\lambda \sqrt{2N\beta - \lambda^2}}{2}\right) & \lambda \in (-\sqrt{2 \beta N}, \sqrt{2 \beta N}) \\ N & \lambda \geq \sqrt{2 \beta N} \end{cases}$

We can now define $\bar{\lambda}_i := F(\lambda_i)$. The level spacings of these new values will have unit mean in expectation. The intuition for this is straightfoward, suppose $\lambda_j$ is the $j$th largest eigenvalue. Then we expect $F(\lambda_j) = j$. So $F(\lambda_{j+1}) - F(\lambda_j) = j + 1 - j = 1$.

def F(eig, size, beta):

if eig <= -np.sqrt(2*beta*size):

return 0

if eig >= np.sqrt(2*beta*size):

return size

else:

# F_λ is defined outside this function

return float(F_λ.subs(β, beta).subs(N, size).subs(λ, eig).evalf())

Below is a demonstration of the unfolding procedure for a GOE. First we generate a $300 \times 300$ GOE and find its eigenvalues

matrix_size = 300

A = RM.Generate_GOE(matrix_size)

λs, V = np.linalg.eigh(A)

Next we find $\bar{\lambda}_i \ \forall i$

λ_bar = [F(eigenvalue, matrix_size, 1) for eigenvalue in λs]

We can verify that this collection of eigenvalues does indeed have level spacing with mean about 1.

eig_spaces = [λ_bar[i+1] - λ_bar[i] for i in range(matrix_size-1)]

np.mean(eig_spaces)

Below is the same demonstration but for a GUE

matrix_size = 300

A = RM.Generate_GUE(matrix_size)

λs, V = np.linalg.eigh(A)

λ_bar = [F(eigenvalue, matrix_size, 2) for eigenvalue in λs]

eig_spaces = [λ_bar[i+1] - λ_bar[i] for i in range(matrix_size-1)]

np.mean(eig_spaces)

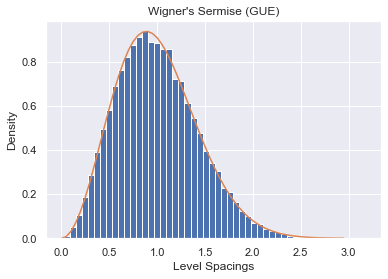

In 1956, E. P. Wigner surmised a formula for the density of level spacings of normalized eigenvalues. Wigner's Surmise says that the density of level distances for an unfolded set of eigenvalues can be approximated by

$$p_{\beta}(s) = a_{\beta} s^{\beta} e^{- b_{\beta} s^2} $$where

$$ a_{\beta} = 2 \frac{\left(\Gamma(\frac{\beta + 2}{2})\right)^{\beta + 1}}{\left(\Gamma(\frac{\beta + 1}{2})\right)^{\beta + 2}}, \ b_{\beta} = \frac{\left(\Gamma(\frac{\beta + 2}{2})\right)^{2}}{\left(\Gamma(\frac{\beta + 1}{2})\right)^{2}}$$Note this is an approximation and does not necessarily converge for large $N$.

gamma = lambda x: scipy.special.gamma(x)

def wigner_surmise(s, beta):

a = 2*(gamma((beta+2)/2))**(beta+1)/(gamma((beta+1)/2))**(beta + 2)

b = (gamma((beta+2)/2))**2/(gamma((beta+1)/2))**2

return a * (s**beta) * np.exp(-b*s**2)

We will test this approximation by generating multiple $100 \times 100$ GOEs and plotting the distribution of their unfolded eigenvalues.

matrix_size = 100

trials = 500

all_GOE_spaces = []

for _ in range(trials):

A = RM.Generate_GOE(matrix_size)

λs, V = np.linalg.eigh(A)

λ_bar = [F(eigenvalue, matrix_size, 1) for eigenvalue in λs]

eig_spaces = [λ_bar[i+1] - λ_bar[i] for i in range(matrix_size-1)]

all_GOE_spaces.extend(eig_spaces)

plt.ylabel("Density")

plt.xlabel("Level Spacings")

plt.title("Wigner's Sermise (GOE)")

plt.hist(all_GOE_spaces, bins=50, density=True)

t = np.arange(0., 3, 0.05)

wigner_curve = wigner_surmise(t, 1)

plt.plot(t, wigner_curve);

Now we do the same test for $100 \times 100$ GUEs

matrix_size = 100

trials = 500

all_GUE_spaces = []

for _ in range(trials):

A = RM.Generate_GUE(matrix_size)

λs, V = np.linalg.eigh(A)

λ_bar = [F(eigenvalue, matrix_size, 2) for eigenvalue in λs]

eig_spaces = [λ_bar[i+1] - λ_bar[i] for i in range(matrix_size-1)]

all_GUE_spaces.extend(eig_spaces)

plt.ylabel("Density")

plt.xlabel("Level Spacings")

plt.title("Wigner's Sermise (GUE)")

plt.hist(all_GUE_spaces, bins=50, density=True)

t = np.arange(0., 3, 0.05)

wigner_curve = wigner_surmise(t, 2)

plt.plot(t, wigner_curve);

We will discuss how to calculate the exact level densities for the $\beta = 2$ case in the next section.